Workshop: Introduction to the design and analysis of experimental evaluations (Online 24 & 28 June 2024)

Date and time: Monday 24 June and Friday 28 June 2024, 9.30am to 12.30pm AEST (registration from 9.15am). This is a full-day workshop delivered in two sessions; registrants must attend both.

Venue: via Zoom. Joining details will be emailed to registrants shortly before the workshop starts.

Facilitators: Dr Vera Newman and Peter Bowers, Australian Centre for Evaluation (ACE)

Register online by: Friday 21 June 2024. Spaces are limited to 25 participants.

Fees (GST inclusive): Members $295, Organisational member staff $415, APS staff $425, Non-members $485, Student member $140, Student non-member $226. Students must send proof of their full-time student status to

Note for APS staff: APS staff who are NOT individual members or staff of an organisational member should register as APS staff. If you are unsure whether your department is an organisational member, email:

Workshop Overview

There is a wide range of methods and approaches to impact evaluation. This course will help participants build their skills in one of those methods – experimental evaluations, also known as randomised controlled trials. This is an intermediate-level course that assumes a basic understanding of key statistical concepts and some prior experience with evaluation generally (but no prior experience with experimental evaluation). While a large part of the course will focus on the quantitative analysis of experimental evaluations, it will also address the value of mixed-method experimental evaluations that incorporate qualitative methods.

The course will be delivered by the Australian Centre for Evaluation (ACE), a branch of the Australian Treasury established in mid-2023 to improve the volume, quality and use of evaluation evidence across government. The facilitators are ACE staff with expertise and experience in experimental methods.

Workshop Content

This course will equip evaluators who are new to experimental evaluation with the basic skills needed to design and analyse experimental evaluations. On completing the course, participants should be able to work on experimental evaluations under the supervision of a more experienced evaluator.

Course participants will learn how to prepare an experimental research design that links coherently to the objectives of an evaluation. In particular, they will learn which evaluation questions about causal links an experimental evaluation can answer.

Participants will also learn how to use randomisation to estimate the causal impact of programs or policies on short-, medium- or long-term outcomes. They will learn how to avoid the risks of bias that can arise within experimental designs, and the value of mixed-method experimental evaluations. Finally, participants will learn how to apply valid quantitative methods to the analysis of experimental evaluations, with a focus on interpreting impact estimates and confidence intervals (including ‘null’ results) and on avoiding the misuse of statistical significance testing.

Workshop Outcomes

After completing this course, participants will:

- Understand what questions an impact evaluation can answer, the role of the counterfactual, and the risk of bias in impact evaluations. They will also be briefly introduced to quasi-experimental evaluation methods.
- Understand the basics of the feasibility and design of randomised controlled trials (RCTs), including outcomes and data, randomisation methods, risks of bias, ethical considerations, power analysis and pre-analysis plans.
- Understand the basics of RCT analysis and reporting, including methods of analysis, interpretation of results, and generalisability of results.
- Understand when they should seek additional technical expertise, and know what additional resources they can refer to.
- Understand the importance of mixed-method experimental evaluations, including the role of qualitative research in such evaluations.

PL competencies

This workshop aligns with competencies in the AES Evaluator’s Professional Learning Competency Framework. The identified domain is:

- Domain 4 – Research methods and systematic inquiry

Who should attend?

This course is targeted at evaluators and researchers who are interested in being involved in the design or analysis of experimental evaluations. It assumes an understanding of basic statistical concepts (e.g., mean, standard deviation, hypothesis testing) but no previous experience with experimental evaluation.

Before the course begins, participants will receive a short primer or a set of online references covering the statistical concepts assumed throughout the course. Specifically, these will give an overview of:

- Mean and standard deviation
- Standard error
- Statistical hypothesis testing
- Linear regression

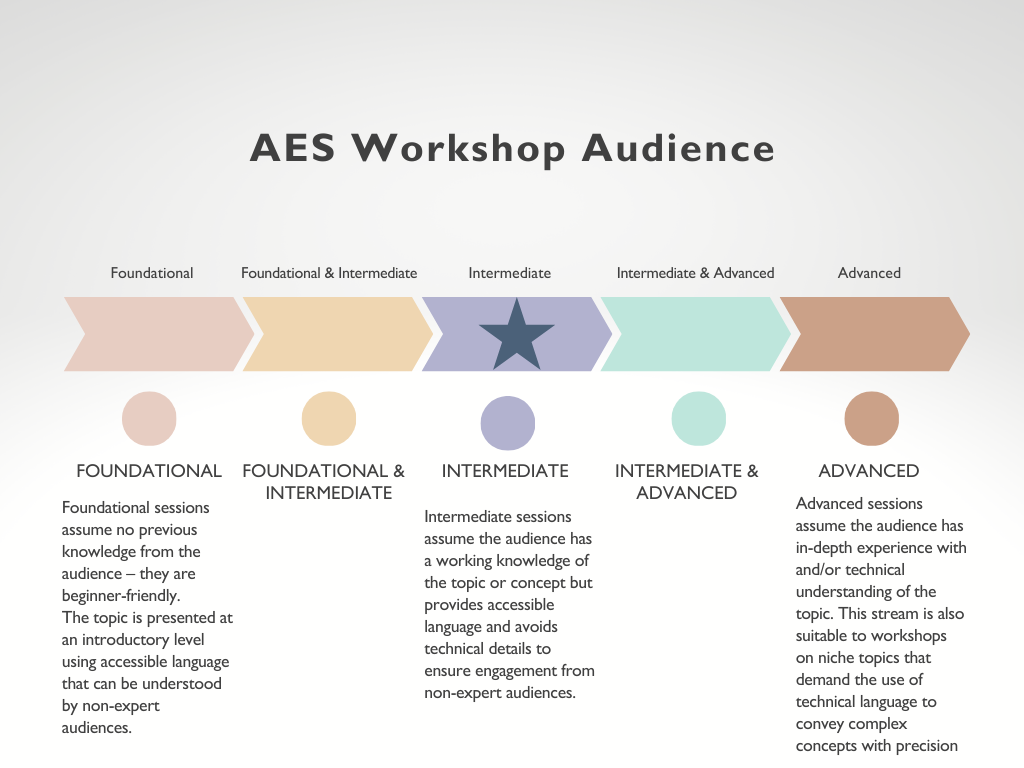

Intermediate

About the facilitators

Dr Vera Newman (PhD Psychology, B Science (Hons) / B Arts) is an Assistant Director in the Australian Centre for Evaluation. She has over six years of experience conducting impact evaluations in the private and public sectors across diverse areas of public policy, with a focus on health and mental health, education, and gender diversity. She designed and analysed a series of field trials in Australian universities to support students’ outcomes, including their retention at university and their overall wellbeing. Before this, Vera conducted lab-based studies of human behaviour at universities. She has also delivered more than seven workshops, both in person and online.

Peter Bowers (M Finance, B Economics (Hons)) is an Assistant Director in the Australian Centre for Evaluation. He has worked on impact evaluations in Kenya, Ethiopia and Indonesia, including a large-scale RCT on the effects of providing microfinance to nomadic cattle herders in East Africa. He has delivered impact evaluation training as part of a professional development course for Indonesian public servants, and has also taught Master's-level students at MIT in the United States.

Workshop start times

- VIC, NSW, ACT, TAS, QLD: 9.30am
- SA, NT: 9.00am
- WA: 7.30am
- New Zealand: 11.30am

For other time zones, please go to https://www.timeanddate.com/worldclock/converter.html

Event Information

| Item | Details |
| --- | --- |
| Event date | 24 Jun 2024 9:30am |
| Event end date | 28 Jun 2024 12:30pm |
| Cut-off date | 21 Jun 2024 2:00pm |
| Location | Zoom |
| Categories | Online Workshops |

We acknowledge the Australian Aboriginal and Torres Strait Islander peoples of this nation. We acknowledge the Traditional Custodians of the lands in which we conduct our business. We pay our respects to ancestors and Elders, past and present. We are committed to honouring Australian Aboriginal and Torres Strait Islander peoples’ unique cultural and spiritual relationships to the land, waters and seas and their rich contribution to society.